The agent homepage of Tiangong AI (天工AI):

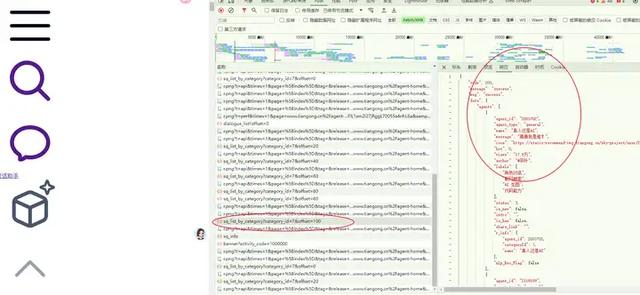

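Press F12 to open the browser's developer tools and inspect the actual request URL and the response data:

Pagination pattern (only the offset parameter changes between pages):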

https://work.tiangong.cn/agents_api/square/sq_list_by_category?category_id=7&offset=0

https://work.tiangong.cn/agents_api/square/sq_list_by_category?category_id=7&offset=80

https://work.tiangong.cn/agents_api/square/sq_list_by_category?category_id=7&offset=100
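The captured URLs differ only in `offset`, which points to offset-based pagination. Below is a minimal sketch for generating the page URLs. It assumes a page size of 20, which is the step used in the prompt later in this post (the captured URLs alone do not confirm it). The names `BASE_URL` and `page_urls` are hypothetical helpers, not part of the site's API.

```python
from urllib.parse import urlencode

# Endpoint taken from the captured requests above
BASE_URL = "https://work.tiangong.cn/agents_api/square/sq_list_by_category"


def page_urls(category_id=7, start=0, stop=200, step=20):
    """Return list-API URLs for offsets start, start+step, ..., stop (inclusive).

    The step of 20 is an assumption about the page size.
    """
    return [
        f"{BASE_URL}?{urlencode({'category_id': category_id, 'offset': offset})}"
        for offset in range(start, stop + 1, step)
    ]


urls = page_urls()
print(len(urls))
print(urls[0])
print(urls[-1])
```

Building the query string with `urlencode` rather than by hand keeps the URL valid if other parameters are added later.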

The site returns JSON data:

{
  "code": 200,
  "message": "success",
  "msg": "success",
  "data": {
    "agents": [
      {
        "agent_id": "3193461",
        "agent_type": "general",
        "name": "假如科比参加欧洲杯",
        "message": "我们的牢大回来了,他参加了欧洲杯,面临巨大挑战,运用你的智慧帮助牢大获得欧洲杯冠军吧!",
        "icon": "https://static-recommend-img.tiangong.cn/ai-text-gen-image/agent-backgroud_9783755_1803007243774124032.jpg",
        "hot": 0,
        "views": "2107",
        "author": "@Anria",
        "labels": [
          "生活娱乐"
        ],
        "status": 3,
        "is_new": false,
        "intro": "",
        "is_has": false,
        "share_link": "",
        "r_info": {
          "agent_id": 3193461,
          "categoryId": 7,
          "name": "假如科比参加欧洲杯"
        },
        "nlp_Rec_Flag": false
      },
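To make the structure of the response concrete, here is a minimal sketch that parses a trimmed copy of it and finds which fields are nested. The sample keeps only a few of the fields shown above, and the variable names are illustrative.

```python
import json

# Trimmed copy of the response shown above (only a few fields kept)
sample = """
{
  "code": 200,
  "message": "success",
  "data": {
    "agents": [
      {
        "agent_id": "3193461",
        "name": "假如科比参加欧洲杯",
        "views": "2107",
        "labels": ["生活娱乐"],
        "r_info": {"agent_id": 3193461, "categoryId": 7}
      }
    ]
  }
}
"""

payload = json.loads(sample)

# The agent records live under data -> agents
agents = payload["data"]["agents"]
first = agents[0]

# Fields whose values are lists or dicts cannot go into an Excel cell directly
nested_keys = [key for key, value in first.items() if isinstance(value, (dict, list))]

print(list(first.keys()))
print(nested_keys)
```

The nested fields (`labels` and `r_info` here) are the ones that need converting to strings before they are written to a spreadsheet.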

Enter the following prompt in DeepSeek:

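You are a Python programming expert. Write a Python script that carries out the following steps:

Create a new Excel file on drive F: tiangongaiagent20240619.xlsx

Request URL:

https://work.tiangong.cn/agents_api/square/sq_list_by_category?category_id=7&offset={pagenumber}

Request method:

GET

Status code:

200 OK

The value of {pagenumber} starts at 0, increases in steps of 20, and ends at 200;

Get the response, which is nested JSON data;

Get the value of the "data" key in the JSON data, then the value of its "agents" key, which is a list of JSON objects;

Extract the names of all keys in each JSON object and write them to the header row of the Excel file; write the corresponding values to the data columns;

Save the Excel file;

Note: print progress information to the screen at every step;

Pause for 5-9 seconds after each page is scraped;

The JSON data must be preprocessed so that nested dictionaries and lists are converted into a format suitable for Excel, for example by converting nested dictionaries to strings;

In recent versions of pandas the append method is deprecated, so pd.concat should be used instead.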

Set the following request headers:

Accept: application/json, text/plain, */*

Accept-Encoding: gzip, deflate, br, zstd

Accept-Language: zh-CN,zh;q=0.9,en;q=0.8

App_version: 1.7.1

Channel:

Device: Web

Device_hash: 38f97d9e16720d12bf57dd50fea53d02

Device_id: 38f97d9e16720d12bf57dd50fea53d02

Origin: https://www.tiangong.cn

Priority: u=1, i

Referer: https://www.tiangong.cn/

Sec-Ch-Ua: "Google Chrome";v="125", "Chromium";v="125", "Not.A/Brand";v="24"

Sec-Ch-Ua-Mobile: ?0

Sec-Ch-Ua-Platform: "Windows"

Sec-Fetch-Dest: empty

Sec-Fetch-Mode: cors

Sec-Fetch-Site: same-site

User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36
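Typing a long header list into a Python dictionary by hand is error-prone. As a rough sketch, header text copied from the developer tools in `Name: value` form can be converted automatically. The helper `parse_headers` is hypothetical, and the sample below uses only a few of the headers listed above.

```python
def parse_headers(raw):
    """Parse 'Name: value' lines into a dict; blank lines are skipped."""
    headers = {}
    for line in raw.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the first colon only, so URLs in the value stay intact
        name, _, value = line.partition(":")
        headers[name.strip()] = value.strip()
    return headers


raw = """
Accept: application/json, text/plain, */*
Channel:
Device: Web
Origin: https://www.tiangong.cn
Priority: u=1, i
"""

headers = parse_headers(raw)
print(headers)
```

A header with no value, such as `Channel`, becomes an empty string, which matches how it appears in the captured request.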

Source code:

import json
import random
import time

import pandas as pd
import requests

# Request headers copied from the browser's developer tools.
# Note: br/zstd responses can only be decoded if the optional
# brotli/zstandard packages are installed.
headers = {
    "Accept": "application/json, text/plain, */*",
    "Accept-Encoding": "gzip, deflate, br, zstd",
    "Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8",
    "App_version": "1.7.1",
    "Channel": "",
    "Device": "Web",
    "Device_hash": "38f97d9e16720d12bf57dd50fea53d02",
    "Device_id": "38f97d9e16720d12bf57dd50fea53d02",
    "Origin": "https://www.tiangong.cn",
    "Priority": "u=1, i",
    "Referer": "https://www.tiangong.cn/",
    "Sec-Ch-Ua": '"Google Chrome";v="125", "Chromium";v="125", "Not.A/Brand";v="24"',
    "Sec-Ch-Ua-Mobile": "?0",
    "Sec-Ch-Ua-Platform": '"Windows"',
    "Sec-Fetch-Dest": "empty",
    "Sec-Fetch-Mode": "cors",
    "Sec-Fetch-Site": "same-site",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36"
}

# Start with an empty DataFrame
df = pd.DataFrame()

# Walk through the pages: offset runs from 0 to 200 in steps of 20
for offset in range(0, 201, 20):
    print(f"Fetching page {offset // 20 + 1}...")
    url = f"https://work.tiangong.cn/agents_api/square/sq_list_by_category?category_id=7&offset={offset}"
    # A timeout keeps the script from hanging on a stalled connection
    response = requests.get(url, headers=headers, timeout=30)

    if response.status_code == 200:
        data = response.json()
        # Extract the list of agents
        agents = data['data']['agents']
        for agent in agents:
            flat_agent = {}
            for key, value in agent.items():
                if isinstance(value, (dict, list)):
                    # Serialize nested values to a string;
                    # ensure_ascii=False keeps Chinese text readable in Excel
                    flat_agent[key] = json.dumps(value, ensure_ascii=False)
                else:
                    flat_agent[key] = value
            df = pd.concat([df, pd.DataFrame([flat_agent])], ignore_index=True)
    else:
        print(f"Request failed, status code: {response.status_code}")

    # Pause for a random 5-9 seconds after each page
    time.sleep(random.uniform(5, 9))

# Save to the Excel file
excel_file = "F:/tiangongaiagent20240619.xlsx"
df.to_excel(excel_file, index=False)
print(f"Data saved to {excel_file}")
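The script always requests a fixed range of offsets. One possible refinement, sketched below, is to stop as soon as a page comes back with no agents. This assumes the API returns an empty `agents` list past the last page, which has not been verified here. `collect_agents` and `fake_fetch` are hypothetical names; `fake_fetch` stands in for a real `requests.get(...).json()` call so the sketch runs offline.

```python
def collect_agents(fetch_page, offsets):
    """Call fetch_page(offset) for each offset and stop at the first empty page."""
    rows = []
    for offset in offsets:
        payload = fetch_page(offset)
        # Tolerate a missing or null "data" / "agents" field
        agents = (payload.get("data") or {}).get("agents") or []
        if not agents:
            break
        rows.extend(agents)
    return rows


def fake_fetch(offset):
    """Offline stand-in for the real HTTP request (illustrative data only)."""
    pages = {
        0: [{"agent_id": "1"}, {"agent_id": "2"}],
        20: [{"agent_id": "3"}],
    }
    return {"code": 200, "data": {"agents": pages.get(offset, [])}}


rows = collect_agents(fake_fetch, range(0, 201, 20))
print(len(rows))
```

Collecting plain dictionaries in a list and building the DataFrame once at the end would also avoid calling `pd.concat` for every record.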