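[125 stars] Awesome-KV-Cache-Management: a comprehensive collection of KV cache management approaches for accelerating large language models (LLMs). Highlights: 1. Gathers more than 100 related research papers covering the latest technical advances; 2. Provides plentiful code links so developers can get started quickly; 3. Continuously updated to keep pace with current research.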

'A repository that serves as a comprehensive survey of LLM development, featuring numerous research papers along with their corresponding code links.'

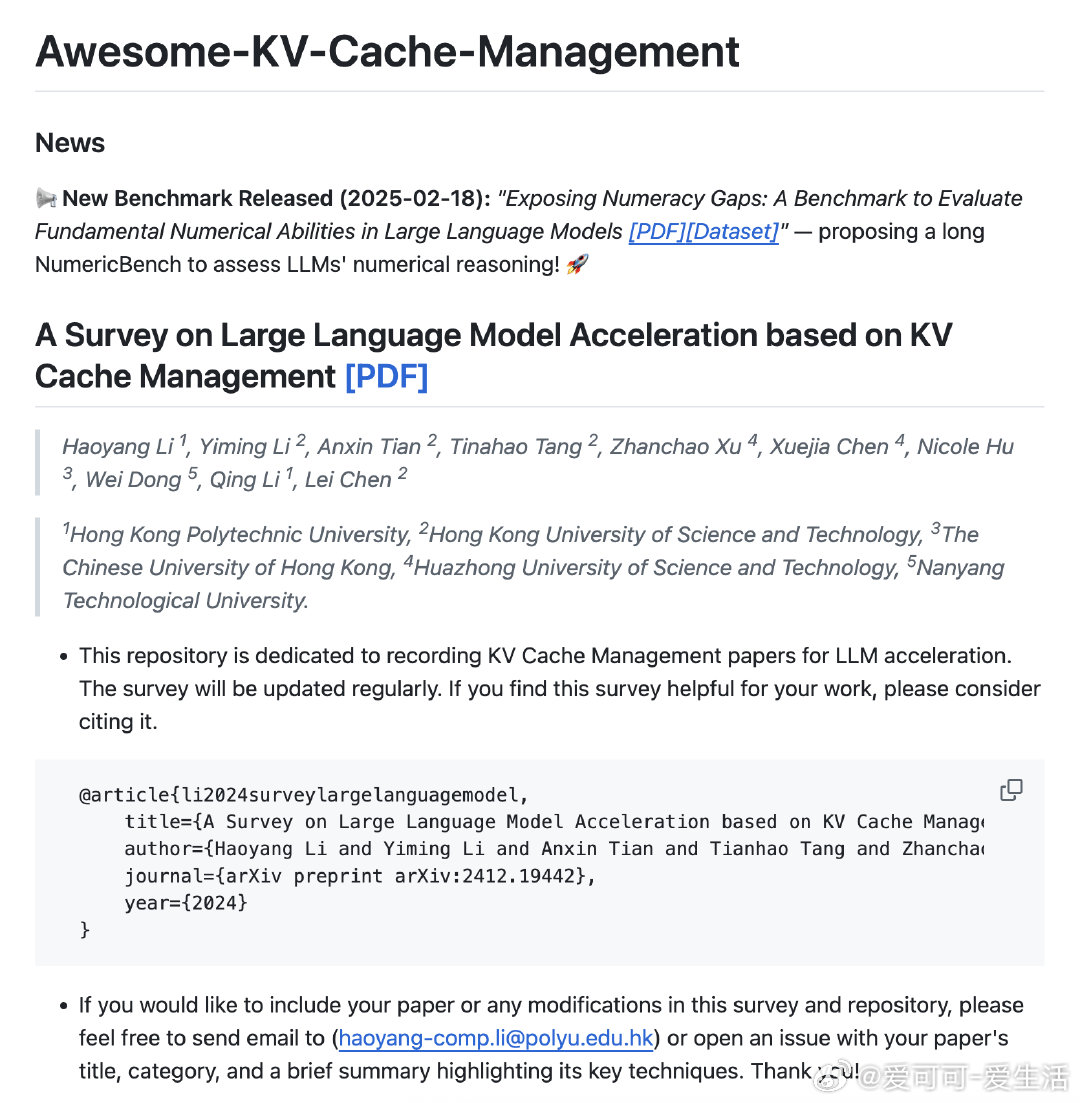

GitHub: github.com/TreeAI-Lab/Awesome-KV-Cache-Management

LLM acceleration, KV cache, academic survey, AI Creation Camp (AI创造营)